It comes as no surprise that public health authorities are increasingly concerned about the potentially dire consequences of vaccine misinformation making its rounds to billions of individuals via social media. Indeed, one recent randomized controlled trial found that exposure to vaccine-related misinformation on one platform reduced people’s intentions to get immunized by over 6% both in the UK and the US. A further study, conducted by the UK Royal Society for Public Health, found that roughly 50% of parents with children under the age of 5 get exposed to anti-vaccine content on Facebook – a trend whose consequences have yet to be quantified.

Given social media’s power to seed doubts about the safety of COVID-19 vaccination and thus delay global immunization efforts, some medical and health practitioners have raised uncomfortable questions about whether the act of sharing misleading information should be subject to criminalization.

Such an approach may be unproductive, as it raises a host of downstream questions about what qualifies as misinformation. Should we censor and penalize a mother complaining of her formerly healthy child suddenly coming down with seizures within weeks of getting vaccinated, misleading as her anti-vaccine interpretations of the events might be? While behavioral scientists like ourselves recognize the benefits that might come out of deploying interventions aimed at nudging people away from sharing misinformation, we also recognize that focusing on the individual level risks overlooking a much bigger underlying factor: the fabric of social media itself. As suggested by the latest evidence, some social media platforms are practically designed to be virtual petri-dishes for the growth of echochambers, where misinformation runs amok and deepens pre-existing biases to potentially dangerous levels.

This inference emerges from a recent study in which scientists performed a technical analysis of over 100 million social media interactions across four popular global platforms – Facebook, Twitter, Reddit, and Gab. Their results, published in last month’s issue of the journal PNAS, paint a thought-provoking picture of the world’s misinformation problem. In it, the primary culprits behind the spread of dangerous information are not necessarily the human beings using social media – but rather the algorithms used to craft the platforms’ news feeds.

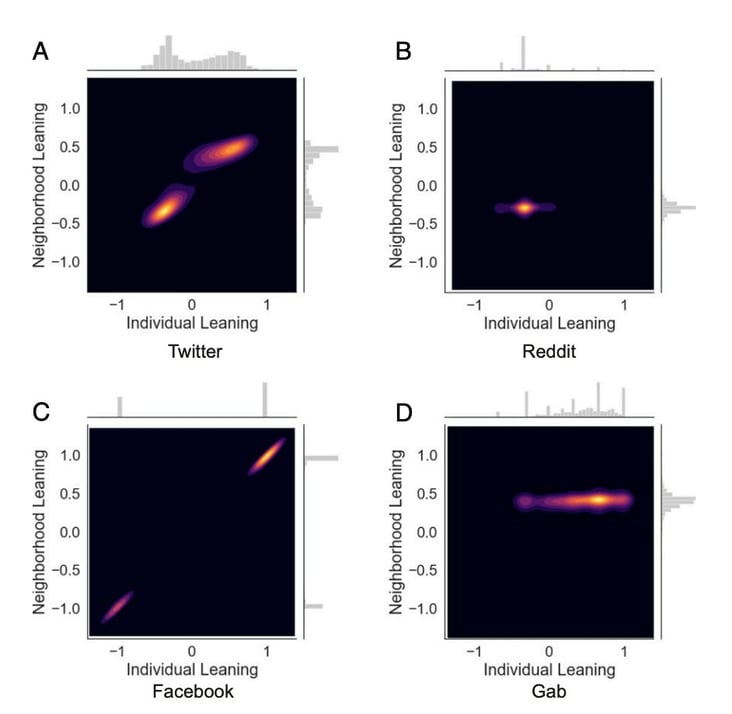

The researchers behind this study quantified each social media platform’s echochamber-iness by assessing the homophily of social interactions (from the Ancient Greek prefix ‘homo’ – same, identical, and ‘philia’ – love). For each user included in the analysis, they used data on the content that user shared or endorsed through actions such as re-tweets, likes, and upvotes to compute a vector of leanings on a range of issues including vaccination, quasi-scientific conspiracies, abortion rights, gun control, and controversial politics. Each user was then considered connected to a range of other users based on whom they followed, befriended, or interacted with by commenting on the same posts. Using the average leaning of these social connections, the researchers could then quantify the degree to which each user found themselves inside an echochamber dominated by the same set of opinions and beliefs.
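
The calculation described above can be illustrated with a minimal sketch. It is not the study's actual code: the user names, leaning scores, and connections are invented, and it assumes a single issue scored from -1 to +1 rather than a full vector of leanings. A user's own leaning is the average of the items they shared, the neighbourhood leaning is the average over their connections, and a strong positive correlation between the two is one simple sign of homophily.

```python
from math import sqrt
from statistics import mean

# Hypothetical toy data: leaning of each item a user shared or endorsed,
# on one issue, from -1 (strongly against) to +1 (strongly in favour).
shared_item_leanings = {
    "ana": [0.8, 0.9, 0.7],
    "ben": [0.6, 0.8],
    "cat": [-0.9, -0.7],
    "dan": [-0.8, -0.6, -1.0],
    "eve": [0.1, -0.1],
}

# Hypothetical social connections (follows, friendships, shared comment threads).
connections = {
    "ana": ["ben", "eve"],
    "ben": ["ana"],
    "cat": ["dan"],
    "dan": ["cat", "eve"],
    "eve": ["ana", "dan"],
}


def individual_leaning(user):
    """Average leaning of the content this user shared or endorsed."""
    return mean(shared_item_leanings[user])


def neighbourhood_leaning(user):
    """Average individual leaning across this user's connections."""
    return mean(individual_leaning(n) for n in connections[user])


def pearson(xs, ys):
    """Plain Pearson correlation between two equally long lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


users = sorted(shared_item_leanings)
own = [individual_leaning(u) for u in users]
neighbours = [neighbourhood_leaning(u) for u in users]

# Values close to +1 mean users mostly sit among like-minded peers.
homophily_score = pearson(own, neighbours)
print(f"homophily (correlation): {homophily_score:.2f}")
```

In this toy network, the users with strong leanings are connected mainly to peers with similar leanings, so the correlation comes out strongly positive; a platform where neighbourhoods were mixed would score closer to zero.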

What they found – perhaps unsurprisingly – is that all platforms contain users who are evidently cocooned in a web of peers who share their beliefs. But some platforms are significantly worse offenders. Twitter and Facebook, in particular, exhibited high degrees of echochamber-iness and polarization, illustrated in the accompanying plot. That is, individuals with strong negative leanings on some issues (e.g., vaccines and abortion) had social neighbourhoods that were almost exclusively composed of individuals with similarly negative leanings, while those with different viewpoints formed entirely parallel communities that never overlapped.

This clustering and polarization of the opinion space did not take place on Reddit and Gab. There, users appeared to hail from narrower political spectrums than found on Facebook and Twitter (with Reddit and Gab mapping quite neatly onto the center-left and right political wings respectively). Nonetheless, users were exposed to a significant spectrum of opinion surrounding the average for each platform, implying that the echochamber-factor wasn’t really there.

The fact that the choice of social media platform can make such a tangible difference to an individual’s likelihood of ending up in an echochamber is, in certain ways, surprising. A great deal of scientific evidence tells us that human beings are naturally predisposed to consume and supply biased information, which logically implies that our desire to seek out echochambers should be independent of the platform that we use. For instance, most of us exhibit a robust confirmation bias: i.e., a tendency to seek out and selectively remember information that adheres to our pre-existing opinions and beliefs. We are also grossly incompetent at judging the veracity of information to which we are exposed. The scientific literature is packed with examples of humans falling prey to a variety of truth illusions, such as our inclination to perceive statements to be true purely because we have heard them a greater number of times, or because they were delivered by someone with an easily remembered name. Add to this the fact that virtually all social media platforms reinforce our biases by offering the opportunity for our shares and comments to get likes, re-tweets, and upvotes, and it seems that echochambers and misinformation should be rife wherever we look.

As it turns out, this isn’t quite the case. The researchers behind this recent PNAS study suggest that the exaggerated echochamber-factor of Facebook and Twitter may not be a coincidence – but rather a function of the fundamental mechanisms these platforms use to provide content to their users. While platforms like Reddit give people the opportunity to cluster into interest-based subreddits and to promote content by popular up-voting, Facebook and Twitter hand content promotion over to algorithms that ensure individuals are fed more of what they already consume. Thus, a recommendation tool allegedly designed to enhance user enjoyment actually serves to create a virtual closed arena where pre-existing opinions can only bounce off walls padded with like-minded content and grow ever more extreme.
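
The contrast between these two ways of promoting content can be sketched with a toy example. This is an illustration only, not any platform's real ranking algorithm or the study's method: the posts, vote counts, and both ranking functions are invented. It compares a feed ordered by community votes, which looks the same for every user, with a feed ordered by closeness to what a user already leans towards.

```python
# Hypothetical posts: (id, leaning from -1 to +1, upvotes).
posts = [
    ("p1", -0.9, 120),
    ("p2", -0.5, 300),
    ("p3", 0.0, 250),
    ("p4", 0.5, 200),
    ("p5", 0.8, 90),
    ("p6", 0.9, 60),
    ("p7", 1.0, 30),
]


def rank_by_votes(posts, k):
    """Vote-driven feed: the k most upvoted posts, identical for every user."""
    return sorted(posts, key=lambda p: p[2], reverse=True)[:k]


def rank_by_similarity(posts, user_leaning, k):
    """Personalised feed: the k posts closest to the user's existing leaning."""
    return sorted(posts, key=lambda p: abs(p[1] - user_leaning))[:k]


def leaning_spread(feed):
    """Width of the range of opinion a feed exposes the user to."""
    leanings = [p[1] for p in feed]
    return max(leanings) - min(leanings)


user_leaning = 0.9  # a user who already holds a strong view
votes_feed = rank_by_votes(posts, k=3)
similar_feed = rank_by_similarity(posts, user_leaning, k=3)

print("vote-driven feed:", [p[0] for p in votes_feed], leaning_spread(votes_feed))
print("personalised feed:", [p[0] for p in similar_feed], leaning_spread(similar_feed))
```

In this invented example, the vote-driven feed spans a wide range of leanings, while the personalised feed contains only posts near the user's own position, which is the narrowing effect the paragraph above describes.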

Establishing a data-driven awareness of the mechanisms of echochamber formation and misinformation is crucial in an era where false content represents a danger to society and public health. Thus, while some might debate the criminalization of misinformation sharing, we as behavioral scientists believe that tackling the issue requires us to look at the wider choice architecture in which these actions ultimately play out. Given what the evidence tells us about the power of platforms’ content-feeding algorithms to propagate and reinforce toxic viewpoints, legislators may need to consider broader interventions, and not shy away from regulation.

At the same time, it is worth exploring other human-centered tactics that are rooted in a greater sense of foresight. This is because, while social media platforms and echochambers are likely here to stay for decades to come, the susceptible human mentality doesn’t have to do the same. The global community can only benefit from renewing its approach to scientific education and the cultivation of critical thinking. Thus, when we eventually find ourselves inside an echochamber – whether it is built on the basis of our own psychological biases or a content-feeding algorithm – we will have been immunized against its potential dangers by a well-developed and critical sense of scientific thinking.

Reference

Cinelli, M., Morales, G. D. F., Galeazzi, A., … & Starnini, M. (2021). The echo chamber effect on social media. Proceedings of the National Academy of Sciences, 118(9).